This publication is one in a series to support parliamentarians at the start of the 43rd Parliament. It is part of the Library of Parliament’s research publications program, which includes a set of publications, introduced in March 2020, addressing the COVID-19 pandemic. Please note that, because of the pandemic, all Library of Parliament publications will be released as time and resources permit.

While there is no universally accepted definition of “autonomous weapon system” (sometimes preceded by the word “lethal”),1 the term generally refers to a weapon system that has two characteristics: it can operate without human intervention; and it can select and attack targets on its own in accordance with preestablished algorithms. Such a system is an advanced application of deep learning, which is a form of artificial intelligence.2 Artificial intelligence that is based on deep learning involves a machine creating its own algorithms after being exposed to enormous amounts of data and making decisions based on these algorithms.

This publication considers selected implications of autonomous weapon systems for international security and for Canada. In particular, it discusses the definition of the concept of autonomy in relation to weapon systems and provides an overview of the current status of the related technology. Lastly, it identifies potential benefits and some concerns regarding autonomous weapon systems in the context of international security and describes both the international campaign to ban such weapon systems and Canada’s approach to the issue.

Autonomous weapon systems can take numerous forms and can be of various sizes. Moreover, they can operate in the air, at sea3 and on land.4 As well, they can function individually or as a “swarm.” A swarm is a coordinated group of similar unmanned systems that are tasked with carrying out a common objective and that maintain constant communication with one another while operating.5

Just as a self-driving car resembles a car operated by a driver, autonomous weapon systems are distinguished from traditional weapon systems more by their software than by their hardware. Unmanned aerial vehicles (UAVs, or aerial drones) and other types of unmanned systems that are remotely controlled by a human operator are not considered autonomous weapon systems. That said, future advances in software might allow existing unmanned systems to operate autonomously.

Weapon systems can also be categorized along a spectrum of autonomy. In general, observers have identified three levels of autonomy:6

These levels of autonomy are not static; the human operator can instruct the weapon system to switch into an operating pattern that is more or less autonomous depending on the context.

While many advanced weapon systems increasingly incorporate various elements of autonomy, there is no agreement among experts – or among countries – as to whether or not a fully autonomous weapon system currently exists.8

While the Government of Canada has no official definition of “autonomous weapon system,” the Department of National Defence (DND) defines “autonomous systems” as “systems with the capability to independently compose and select among various courses of action to accomplish goals based on its [information] and understanding of the world, itself, and the situation.”9 This definition is based on that developed by the United States (U.S.) Defense Science Board in 2016.

Countries that define the term “autonomous weapon system” do so in various ways. For example, in the U.S., a Department of Defense directive on “autonomy in weapon systems” defines the term as “a weapon system that, once activated, can select and engage targets without further intervention by a human operator.”10

In the United Kingdom (U.K.), the Ministry of Defence defines “fully autonomous weapons systems” as “machines with the ability to understand higher-level intent, being capable of deciding a course of action without depending on human oversight and control.” As well, it states that such weapon systems do not yet exist and are “unlikely to [exist] in the near future.”11

France’s definition of the term includes the notion of no human supervision or control from the moment of activation, and the capability to act beyond the parameters established by human operators in order to pursue a goal. Based on this definition, the country states that lethal autonomous weapon systems do not yet exist, although it does not consider any sort of existing unmanned aerial vehicle or missile to constitute an autonomous weapon system.12

Cyber weapons that select and attack computer systems based on certain criteria but without explicit direction could also be considered autonomous weapon systems.13

The variability in definitions of “autonomous weapon system” across countries and international organizations may present challenges if the goal is to develop an internationally accepted definition of the term in the future.

According to the Stockholm International Peace Research Institute, countries known to be developing weapon systems with various autonomous features include China, France, Germany, India, Israel, Italy, Japan, Russia, South Korea, Sweden, the U.K. and the U.S.14 From a global perspective, the U.S. military has been leading the development of autonomy in a range of applications, especially since the 2014 Defense Innovation Initiative. This initiative emphasizes the importance of technological advances, including in the area of autonomy, for the U.S. military to maintain its advantage over potential adversaries.15

Existing weapon systems have varying degrees of autonomy. For example, the “Harpy” is a one-time-use autonomous UAV developed by Israel Aerospace Industries.16 Once launched, it circles over a pre-determined area and can detect and attack enemy radar systems without any human intervention, which is why it is referred to as a “fire and forget” type of weapon system. According to the Bard College Center for the Study of the Drone, the Harpy has been sold to China, Germany, India, South Korea and Turkey, among other countries.17

The “Super aEgis II,” which is an anti-personnel autonomous sentry gun developed by DODAAM in South Korea, can identify and fire on human targets after being aimed in a specific direction. After selecting an incoming target and delivering a series of warning messages, the Super aEgis II requires a human operator to input a password before firing. DODAAM has sold the Super aEgis II to Qatar and the United Arab Emirates.18

A third example is the “Brimstone” guided missile that was developed for the U.K.’s Royal Air Force. It can be launched from a fighter aircraft or, in its “Sea Spear” variation, from a battleship. In its autonomous mode, the Brimstone targets and fires on vehicles in a small designated area. It has been sold to Saudi Arabia.19

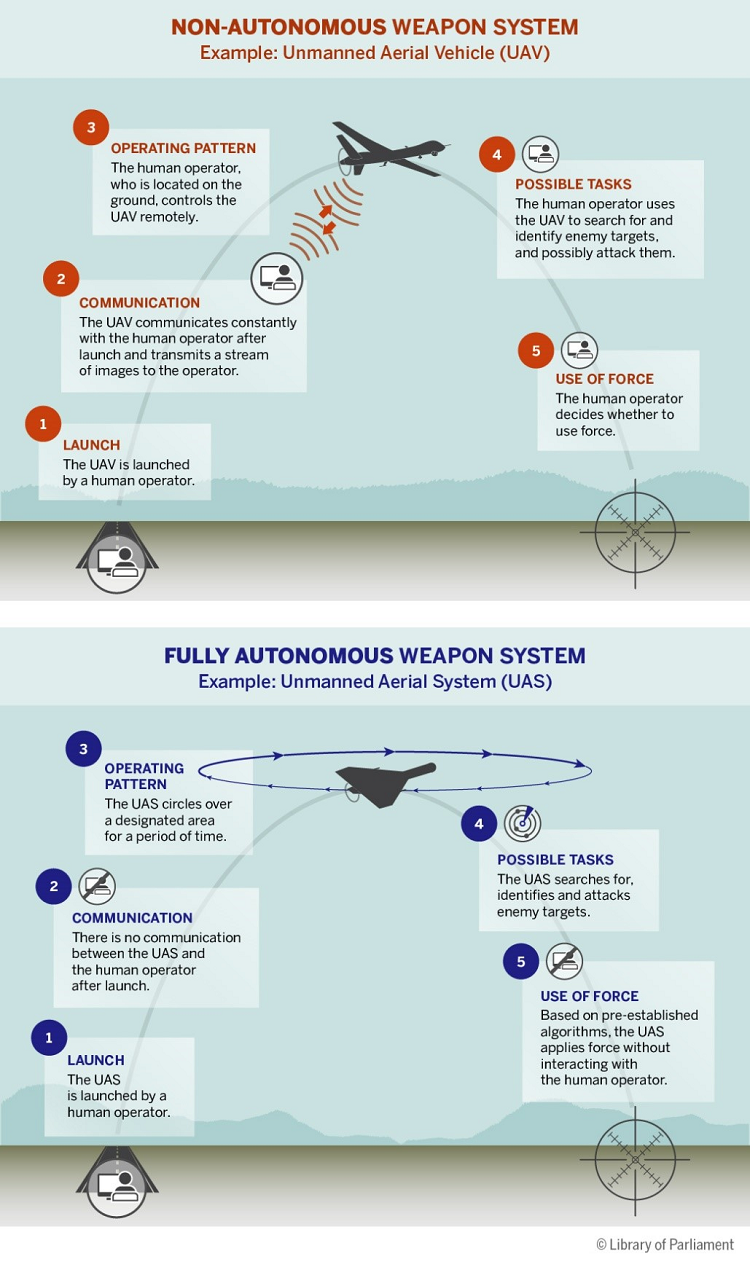

Figure 1 below compares five aspects of a non-autonomous weapon system with those of a fully autonomous weapon system.

Figure 1 – Non-Autonomous Weapon System vs. Fully Autonomous Weapon System

Source: Figure prepared by Library of Parliament based on information obtained from Paul Scharre, Army of None: Autonomous Weapons and the Future of War, W.W. Norton and Company, New York, 2018. Illustration of the fully autonomous weapon system based on IAI’s HARPY.

According to a number of observers, the potential benefits associated with the development of autonomous weapon systems generally fall into three categories: operational benefits that allow militaries to be more effective; economic benefits relating to the efficiency of resource allocation; and human benefits arising from the possibility that the use of such systems will reduce casualties in conflict situations.

According to a paper published by the Stockholm International Peace Research Institute,20 the operational benefits of autonomous weapon systems include speed – they can make decisions faster than human operators – and agility – they do not require constant communication with human operators in order to function and are therefore able to adapt more quickly. Furthermore, they may be more accurate than human operators, and are likely to have greater endurance because they are not subject to the cognitive and physical limitations of human operators.

As well, because autonomous weapon systems do not need to communicate with a human operator, they may be better suited than traditional weapon systems to operate in remote environments, such as in the Arctic or at sea. Moreover, swarming technology could be used by military aircraft to protect against anti-aircraft missiles.

Finally, according to the University of Pennsylvania’s Michael C. Horowitz, autonomous weapon systems could make it easier for the military to operate more deeply in enemy territory. Such long-distance operations could mean fewer casualties and a reduced need for supply and communication networks.21

Despite high initial expenditures on research and development, autonomous weapon systems may have economic benefits through reducing the cost of military operations in various ways.

For example, with such systems, fewer service members may be needed to accomplish the same task, thereby allowing militaries to reallocate personnel and other resources to more complex tasks, and away from “dull, dirty or dangerous”22 jobs.

Autonomous weapon systems can also reduce personnel costs, as pointed out by the Fiscal Times’ David Francis. In 2013, the U.S. Department of Defense spent US$850,000 annually to equip and maintain a single soldier in Afghanistan; in that same year, a small armed robot cost US$230,000.23

Because their use might reduce the number of casualties, autonomous weapon systems could have human benefits. For example, militaries might replace human-operated fighter aircraft with such systems, thereby eliminating the risk to their pilots.24 As well, citing historical examples of mistakes and atrocities that have occurred in warfare, some commentators argue that autonomous weapon systems could reduce civilian casualties.25 Such systems would not attack in revenge, fear or anger, and would not miscalculate because of stress or fatigue.

In supporting the view that autonomous weapon systems might reduce casualties, the U.S.’s 2018 submission to the United Nations Group of Governmental Experts (GGE) states: “Automated target identification, tracking, selection, and engagement functions can allow weapons to strike military objectives more accurately and with less risk of collateral damage.”26

Despite the potential operational, economic and human benefits of autonomous weapon systems, some concerns exist about their development and use. One area of concern focuses on the potential for such systems to have a destabilizing effect on peace and security. Another area of concern relates to humanitarian issues arising from the use of these systems, while a third concern is linked to the potential failure of such systems.

Throughout history, some types of weapon systems have had more of a destabilizing effect on international relations than have others. Paul Scharre, Senior Fellow at the Center for a New American Security, concludes that “autonomy benefits both offense and defense; many nations already use defensive supervised autonomous weapons. But fully autonomous weapons would seem to benefit the offense more.”27 Some commentators believe that a state that has autonomous weapon systems could have an incentive to strike first, which could increase the incidence and severity of conflict.28 For example, a state that is considering a strike on a foreign target may be more likely to take this action if it has autonomous UAVs because the possibility of that state’s pilots being shot down is eliminated.29

Autonomous weapon systems might also be well suited to targeting and destroying nuclear weapons and their launch vehicles, thereby threatening the stability brought about by nuclear deterrence.30

As well, as autonomous weapon systems become smaller in size and less expensive to produce, they could become available to terrorist organizations.31 For instance, Daesh – also known as the Islamic State of Iraq and Syria, or ISIS/ISIL/Islamic State – has used commercially available, remote-controlled UAVs to drop grenades on U.S. forces in Iraq,32 and it and other terrorist organizations might also use autonomous weapon systems if this technology becomes less expensive.

United Nations Secretary-General António Guterres has characterized autonomous weapon systems as “morally repugnant,” and is among those who believe that the prospect of a machine determining who lives and who dies is unacceptable.33

In its 2018 report regarding autonomous weapon systems, Human Rights Watch argued that such systems are inherently illegal under international law because they contravene the “Martens Clause” of the 1977 protocols to the Geneva Conventions.34 This clause states that, in situations not explicitly covered by international law, civilians and combatants “remain under the protection and authority of the principles of international law derived from … the principles of humanity and from the dictates of human conscience.”35 Since an autonomous weapon system is not human, it is incapable of acting in accordance with the principles of humanity, including ethics, justice, dignity, respect and empathy. It may also be difficult to design an autonomous weapon system that respects the international laws of war, such as the prohibition on attacking soldiers who are surrendering.36

Writing on his own behalf, U.S. Army Major Daniel Sukman suggested that, if a large share of the U.S.’s overseas military operations were to be conducted using autonomous weapon systems, adversaries could find it more advantageous to attack the U.S. itself than to target the country’s overseas forces. In doing so, an adversary might destroy the systems’ network command centres. As well, attacking targets in the U.S., rather than those overseas, could increase the American public’s opposition to the conflict.37

Finally, if a “fire and forget” drone were to destroy a civilian building, rather than a military installation, it is unclear who would be responsible and thereby face consequences.

Despite the possibility that autonomous weapon systems could reduce casualties and accidents, no weapon system is immune to failure. There is no reason to believe that autonomous weapon systems will fail more or less often than non-autonomous weapon systems. However, the former systems are likely to fail in different ways than the latter systems. It may be difficult to predict what an autonomous weapon system will do and to determine whether and why it has failed.38

Autonomous weapon systems are based on artificial intelligence that, at least at the early stages of development, involves choices made by humans. These choices and any inherent biases influence the algorithms that, for example, could be used to make targeting decisions in conflict situations, thereby influencing whether a particular person lives or dies.39 Mines Action Canada’s Erin Hunt believes that autonomous weapon systems might make targeting decisions based on biases, with drastic consequences.40

Carleton University’s Stephanie Carvin argues that, with autonomous weapon systems’ unprecedented and unique levels of complexity, “normal accidents” – those that occur not because of a single fatal design flaw, but due to the interaction of complex parts in unforeseen circumstances – become increasingly likely.41 Even if such systems are tested rigorously, it is impossible to test every situation that they could face.

Moreover, an autonomous weapon system might not be able to recognize that it is failing. Human operators who make a mistake, such as with an incident of friendly fire, might recognize immediately that a mistake has occurred and change their course of action. However, an autonomous weapon system that fails in a certain way is likely to continue to operate in the same way and to make the same mistake until it is destroyed or reprogrammed.

Because of the potential for autonomous weapon systems to fail, adversaries may attempt to cause failures, including by exploiting systems’ vulnerabilities. As Paul Scharre explains, “once an adversary finds a vulnerability in an autonomous system, he or she is free to exploit it until a human realizes the vulnerability and either fixes the system or adapts its use. The system itself cannot adapt.”42

Thus, autonomous weapon systems may fail in ways that humans may not and thereby cause unintended fatalities or serious damage. The ways in which they fail may also be less acceptable from society’s perspective. However, such systems may also – on average – cause relatively fewer unintended fatalities and less damage than human-operated systems.

Various groups are advocating a full or partial ban on autonomous weapon systems. In 2016, the High Contracting Parties to the Convention on Certain Conventional Weapons – including Canada – established the GGE on lethal autonomous weapon systems. Under the auspices of the United Nations, the GGE provides a forum for states, academic bodies and non-governmental organizations to discuss best practices and to recommend a way for the international community to approach emerging military technologies, including autonomous weapon systems.43 The GGE met most recently in August 2019,44 and the High Contracting Parties met in November 2019.

Media sources suggest that no substantial progress towards a ban was made at the latter meeting.45

According to the Campaign to Stop Killer Robots, as of October 2019, 29 governments had called for international law to ban the development and use of fully autonomous weapon systems, and one government – China’s – had supported a ban on the use, but not on the development, of such systems.46 Despite the Prime Minister of Canada’s indication in December 2019 that Canada would work towards such a ban, the Government of Canada has not issued a formal statement supporting a ban, and Canada is not among the 29 governments mentioned above. As of that date, other governments – including those of Australia, France, Germany, Russia, the U.K. and the U.S. – were opposed to attempts to ban autonomous weapon systems.47

A number of countries opposed to a ban on autonomous weapon systems have nonetheless implemented self-imposed restrictions on their development. For example, through U.S. Department of Defense Directive 3000.09, the U.S. mandates that any such system shall be designed to “allow commanders and operators to exercise appropriate levels of human judgment over the use of force.”48 Additionally, in October 2019, the Defense Innovation Board established by the U.S. Secretary of Defense published a report that recommended five principles for the use of artificial intelligence by the U.S. military, including human responsibility, and avoiding bias and unintended harm.49 As well, the French Minister of the Armies has stated that France refuses to allow any machine operating without any human control to make decisions about life or death.50

Paul Scharre identifies a number of factors that he believes complicate efforts to ban autonomous weapon systems. In his view, these factors include the constantly evolving nature of the relevant technology, a lack of agreement about the definition of such systems and the difficulties that would be associated with verifying compliance with any international ban. He also notes steps that the international community could take as alternatives to a full ban on such systems, including a ban on antipersonnel autonomous weapon systems only or an international agreement establishing a general principle about the role of human judgment in armed conflict.51

While Canada is at the forefront of civilian artificial intelligence research,52 the country does not appear to be developing autonomous weapon systems. That said, through Defence Research and Development Canada, DND is researching topics of autonomy and artificial intelligence as they pertain to defence. In 2018, for example, DND sought proposals from the private and academic sectors to assist with a study “seeking to promote revolutionary advances in our understanding of autonomous systems for defence and security applications.”53

The Government of Canada notes that “[t]he Canadian Armed Forces is committed to maintaining appropriate human involvement in the use of military capabilities that can exert lethal force.”54 At the United Nations General Assembly in 2017, Canada stated that a “deeper understanding”55 of lethal autonomous weapon systems is needed in the context of the Convention on Certain Conventional Weapons.

In December 2019, in a ministerial mandate letter, the Prime Minister of Canada instructed the Minister of Foreign Affairs to “advance international efforts to ban the development and use of fully autonomous weapons systems.”56 Prior to December 2019, the Government of Canada had not announced its position on a potential ban on such systems, and – in May 2018 – had stated that such a ban would be “premature, given that the technology does not currently exist.”57 Canada’s support for such a ban contrasts with the positions taken by some of the country’s allies, including France, the U.K. and the U.S.58

Despite the Government of Canada’s intention to work towards an international ban on autonomous weapon systems, the Canadian Armed Forces may one day face adversaries that have developed such systems. Given that autonomous weapon systems can make decisions much faster than humans, and can operate without the rest and resupply that are needed by human soldiers, facing such adversaries will require the Canadian Armed Forces to adapt to these changing circumstances.59

Around the world, research is continuing into autonomy in military systems. Rapid technological developments in this area increase the potential for advances to outpace evolution in military doctrine,60 diplomacy, political decision-making and jurisprudence.

A consensus is emerging that, in most circumstances, some level of human control must exist before lethal force is used, and that various elements of international law must apply to the use of all weapon systems, including those with varying degrees of autonomy.61 However, there is no consensus about whether, and – if so – how, such principles should be reflected in international law.

Finally, despite all efforts to anticipate developments in this area, the capabilities of autonomous weapon systems, the potential for them to fail and the ways that they are likely to change international relations may not be evident until they are used in a crisis or a situation involving conflict.

† Papers in the Library of Parliament’s In Brief series are short briefings on current issues. At times, they may serve as overviews, referring readers to more substantive sources published on the same topic. They are prepared by the Parliamentary Information and Research Service, which carries out research for and provides information and analysis to parliamentarians and Senate and House of Commons committees and parliamentary associations in an objective, impartial manner.

© Library of Parliament